Enable Language Models

Language models are the core of AI agents: they understand and generate human-like text. Before you can use an agent team in Alchemist, you need to enable language model providers and select the models you want to use.

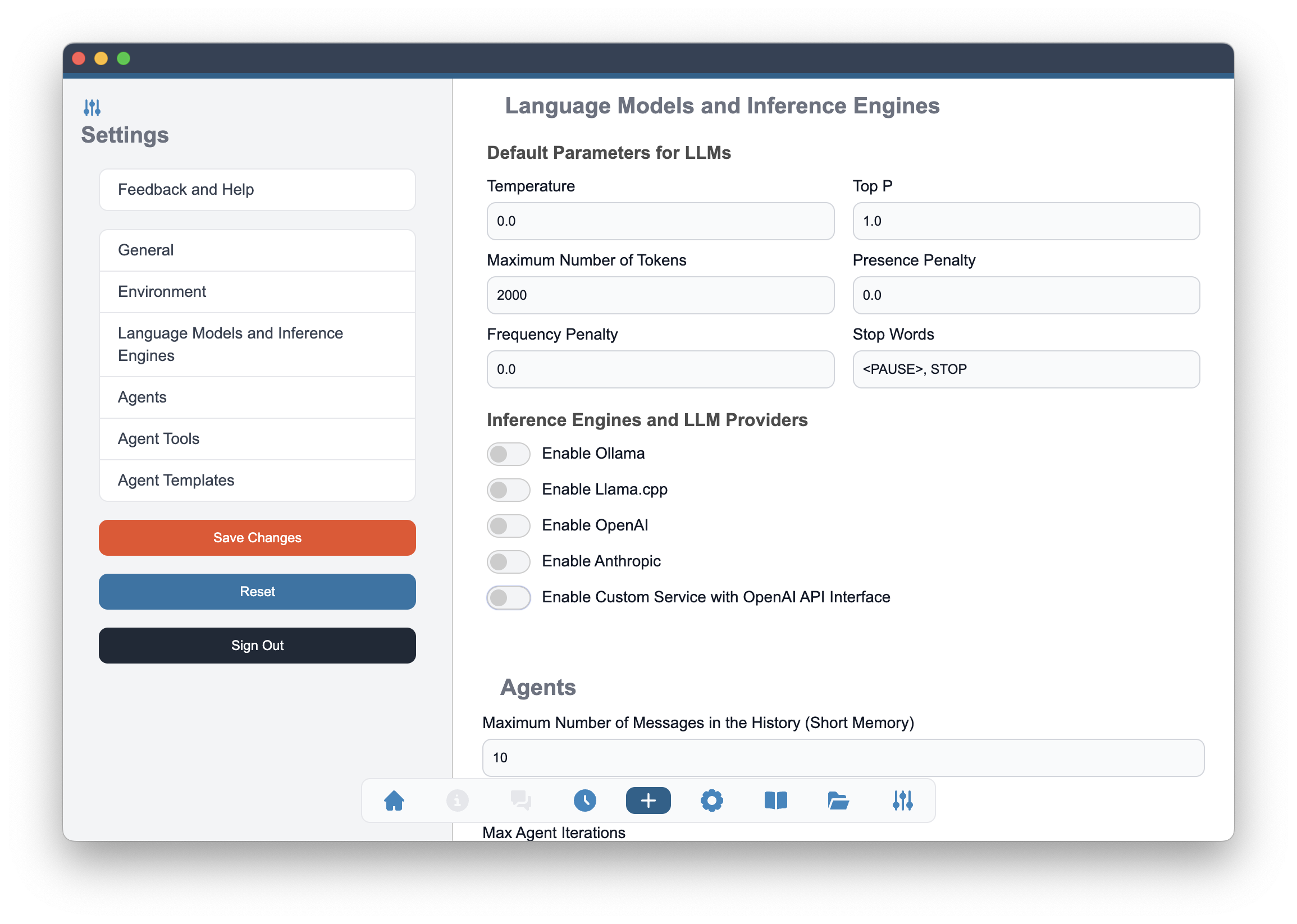

The Language Models and Inference Engines section of the Settings lets you set the default parameters of language models and enable several language model providers. Both local inference engines and remote APIs are supported, so you can run LLMs on your own system or use a model provider's API through a subscription.

Alchemist does not ship with any language models.

The Language Models section starts with settings that are common to all models. These serve as initial default values, and you can change them in the agent configuration.

Model parameters

When you enable language models, you can set the following parameters:

- Temperature: A value between 0 and 1 that controls the randomness of the model's output. Lower values make the output more deterministic, while higher values make it more random.

- Top P: A value between 0 and 1 that controls the diversity of the model's output. Lower values make the output more focused, while higher values allow for more diverse outputs.

- Maximum Number of Tokens: The maximum number of tokens to generate in the response. The upper bound of this value depends on the chosen model.

- Presence penalty: A value between -2 and 2 that penalizes tokens that have already appeared in the text, regardless of how often. Higher values make the model more likely to introduce new tokens and move on to new topics.

- Frequency penalty: A value between -2 and 2 that penalizes tokens in proportion to how often they have already appeared in the text. Higher values make the model less likely to repeat the same tokens.

- Stop Words: One or more character sequences that, when generated, stop the model from generating further tokens.
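In general, these parameters are applied by the inference engine or API when it picks each next token, not by Alchemist itself. As a simplified illustration, the Python sketch below shows one common way they combine: the penalties follow the formula OpenAI documents for its API, Temperature rescales scores before the softmax, Top P keeps only the most likely tokens, and stop words truncate the output. Individual engines such as Ollama or Llama.cpp may apply them in a different order or with different details, and the function names are hypothetical.

```python
import math


def next_token_distribution(logits, counts, temperature=0.7, top_p=0.9,
                            presence_penalty=0.0, frequency_penalty=0.0):
    """Turn raw next-token scores into sampling probabilities.

    logits: dict mapping token -> raw score produced by the model
    counts: dict mapping token -> times the token already appeared in the text
    """
    # Penalties: the frequency penalty grows with every repetition, the
    # presence penalty applies once as soon as a token has appeared at all.
    adjusted = {
        tok: score
        - counts.get(tok, 0) * frequency_penalty
        - (1.0 if counts.get(tok, 0) > 0 else 0.0) * presence_penalty
        for tok, score in logits.items()
    }

    # Temperature: divide scores before the softmax. Lower values sharpen the
    # distribution; a tiny floor avoids dividing by zero at temperature 0.
    t = max(temperature, 1e-6)
    best = max(adjusted.values())  # subtract the max for numerical stability
    weights = {tok: math.exp((s - best) / t) for tok, s in adjusted.items()}
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda item: item[1], reverse=True)

    # Top P (nucleus): keep the most likely tokens until their cumulative
    # probability reaches top_p, then renormalize over the kept tokens.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}


def apply_stop_words(text, stop_words):
    """Cut generated text at the earliest occurrence of any stop word."""
    cut = len(text)
    for stop in stop_words:
        if stop:  # ignore empty entries
            idx = text.find(stop)
            if idx != -1:
                cut = min(cut, idx)
    return text[:cut]
```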

The values set here are only defaults. You can change them on the agent configuration screen and adjust them separately for each agent in the team.
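To illustrate how defaults and per-agent overrides relate, here is a minimal Python sketch using a frozen dataclass: every agent starts from the same defaults and replaces only the fields it changes. The class, field names, and default values are hypothetical and do not describe Alchemist's internal configuration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ModelParams:
    # Hypothetical defaults standing in for the values entered in Settings.
    temperature: float = 0.7
    top_p: float = 1.0
    max_tokens: int = 1024
    presence_penalty: float = 0.0
    frequency_penalty: float = 0.0
    stop_words: tuple = ()


DEFAULTS = ModelParams()

# Each agent copies the defaults and overrides only what it needs;
# the shared defaults object itself is never modified.
researcher = replace(DEFAULTS, temperature=0.2)
writer = replace(DEFAULTS, temperature=0.9, max_tokens=2048)
```

Because the dataclass is frozen, adjusting one agent cannot accidentally change the defaults used by the others.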

Supported Inference Engines

Alchemist supports several inference engines for language models. You can choose the one that best fits your needs. The following engines are supported:

- Ollama https://ollama.com

- Llama.cpp https://github.com/ggml-org/llama.cpp

- OpenAI https://openai.com

- Anthropic https://anthropic.com

- OpenAI compatible APIs

To enable a specific engine, you need to toggle the corresponding switch in the Alchemist settings. Both local inference engines (Ollama and Llama.cpp) and remote APIs (OpenAI, Anthropic, and OpenAI compatible APIs) are supported.

Once you have enabled the desired engine, you can configure its parameters. The configuration options may vary slightly depending on the engine you choose.

Many model providers expose an OpenAI compatible interface in addition to their own, for example Google for Gemini, xAI for Grok, and DeepSeek. The Custom Service with OpenAI API Interface lets you add these providers.

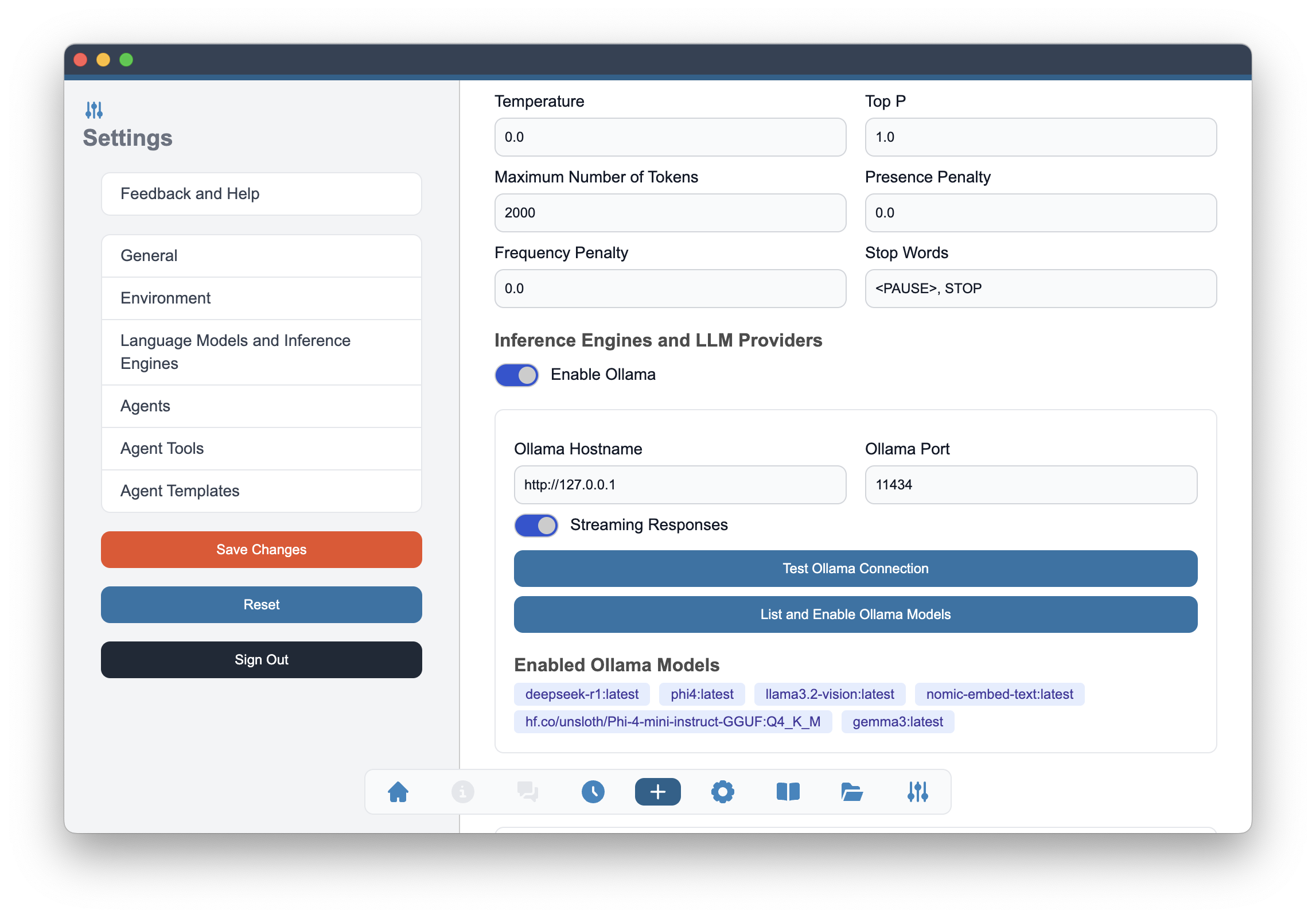

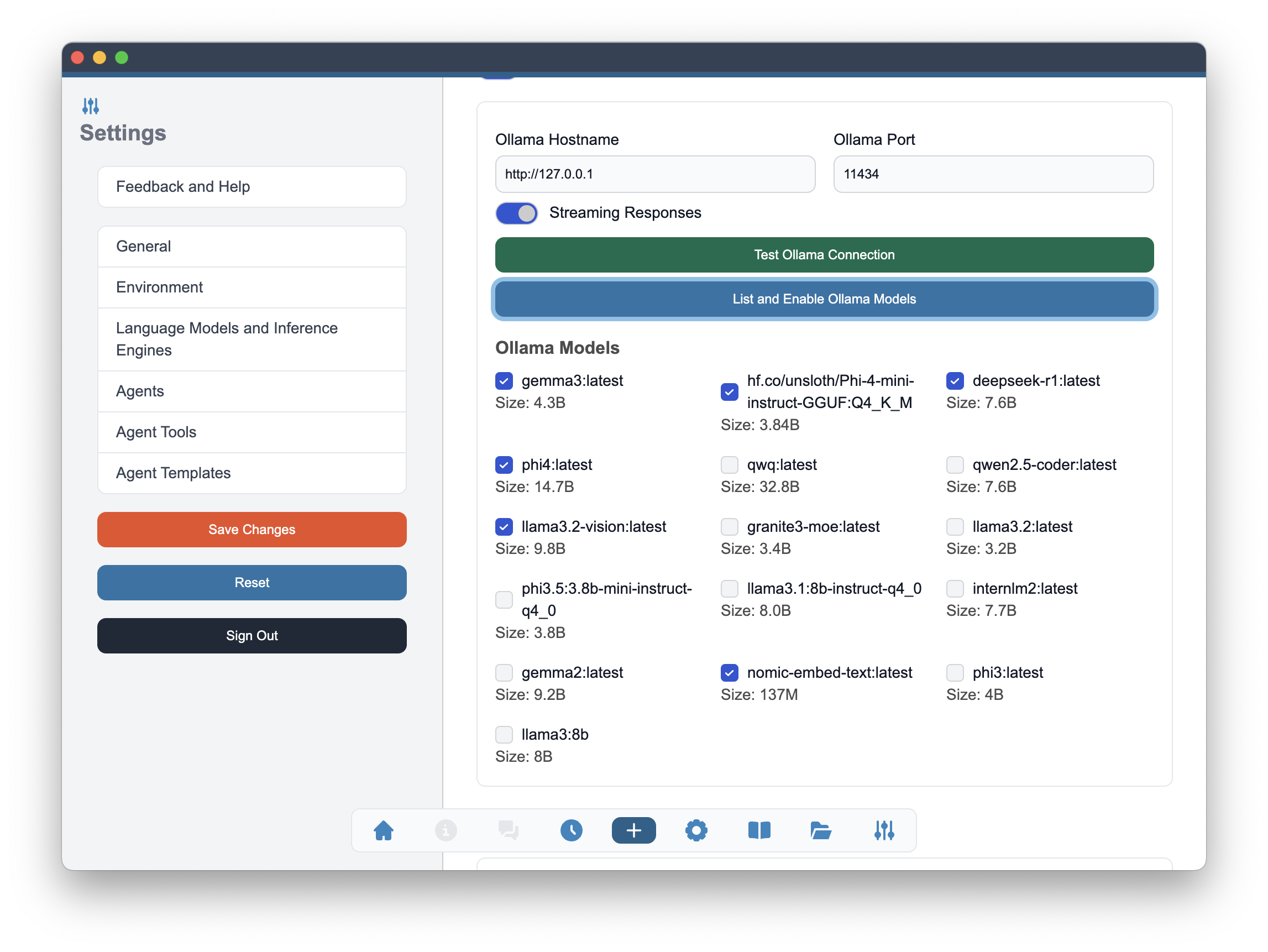

Ollama

Ollama is a local inference engine that allows you to run language models on your own machine. To use Ollama, you need to install it and then enable it in the Alchemist settings.

To install Ollama, follow the instructions on the Ollama download page. Once Ollama is installed, you can download language models from the Ollama Library and enable Ollama in the Alchemist settings.

After enabling Ollama, you can check the connection to the Ollama engine and list the models that are served by it. You can also select the models you want to use in Alchemist.

The Ollama Hostname and Ollama Port fields configure the connection to the Ollama engine. By default, the hostname is http://127.0.0.1 and the port is 11434. If you have changed these values in your Ollama installation, update them in the Alchemist settings as well.

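If Ollama runs on a different machine, set the hostname to that machine's IP address and make sure the Ollama engine is reachable from the machine where Alchemist is running. By default, Ollama listens only on 127.0.0.1, so on the remote machine it usually has to be configured to accept outside connections, for example by setting the OLLAMA_HOST environment variable to 0.0.0.0.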

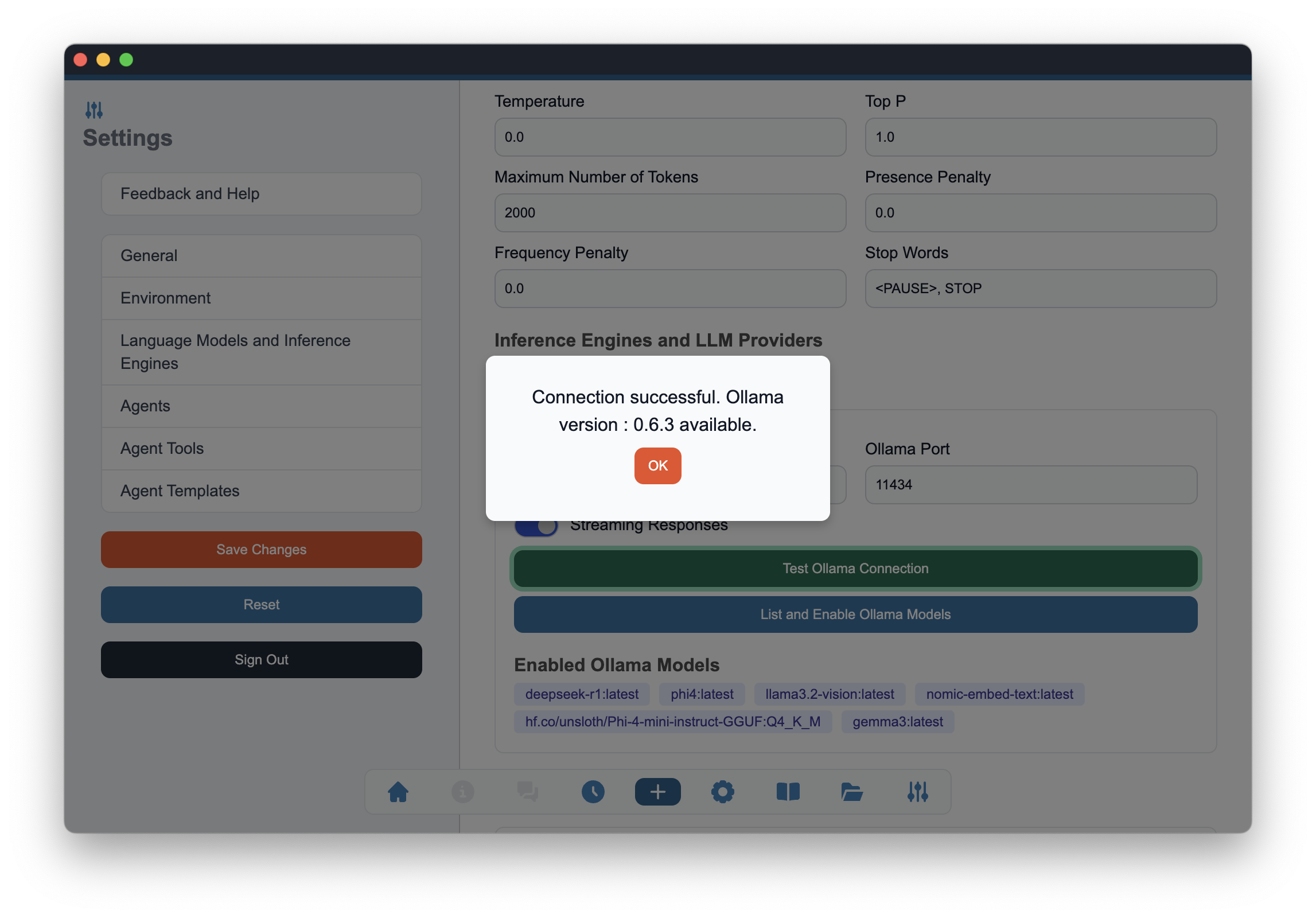

To check the connection to the Ollama engine, you can use the Test Ollama Connection button in the Alchemist settings. This will verify that the Ollama engine is running and accessible.
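If you want to perform a similar check by hand, Ollama's REST API answers GET /api/version on its base URL. The Python sketch below, using only the standard library, combines the Ollama Hostname and Ollama Port fields into that base URL and queries it. How the Test Ollama Connection button works internally is not documented here, and the helper names are hypothetical.

```python
import json
import urllib.request


def ollama_base_url(hostname="http://127.0.0.1", port=11434):
    """Combine the Ollama Hostname and Ollama Port fields into a base URL."""
    if "://" not in hostname:
        hostname = "http://" + hostname  # assume plain HTTP when no scheme is given
    return f"{hostname.rstrip('/')}:{port}"


def check_ollama_connection(base_url, timeout=5.0):
    """Return the Ollama server version, or raise if the server is unreachable."""
    with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
        return json.load(resp)["version"]
```

For example, check_ollama_connection(ollama_base_url()) returns the version string of a local Ollama server and raises urllib.error.URLError if nothing is listening on the configured address.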

Once the connection is established, click the List and Enable Ollama Models button in the Alchemist settings to display all models served by the Ollama engine that are available for use in Alchemist.
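Ollama exposes its locally available models through GET /api/tags, which returns a JSON object with a models array whose entries carry a name field. The Python sketch below shows how that list can be fetched and reduced to model names. It illustrates the public Ollama API rather than how Alchemist builds its list, and the function names are hypothetical.

```python
import json
import urllib.request


def parse_ollama_models(payload):
    """Extract sorted model names from a decoded /api/tags response."""
    return sorted(model["name"] for model in payload.get("models", []))


def list_ollama_models(base_url="http://127.0.0.1:11434", timeout=5.0):
    """Fetch /api/tags from an Ollama server and return its model names."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_ollama_models(json.load(resp))
```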

Once the models are listed, you can select the ones you want to use in Alchemist. This will allow you to customize the language models that are available for your agents.

To select the models, check the boxes next to the models you want to enable. You can enable multiple models.

The Streaming Responses option can be enabled in the Ollama settings. With streaming, output from the Ollama engine arrives as it is being generated, which is useful for long-running tasks or when you want to display the output progressively.
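When streaming is requested, Ollama's chat endpoint (POST /api/chat) sends the reply as newline-delimited JSON: each line carries a piece of text in message.content, and the last object has done set to true. The Python sketch below shows how such a stream can be stitched back together. Whether Alchemist uses this particular endpoint is an assumption, and the function name is hypothetical.

```python
import json


def collect_ollama_stream(lines):
    """Join streamed /api/chat chunks (one JSON object per line) into the full reply.

    lines: an iterable of str or bytes, for example an HTTP response body
    read line by line.
    """
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines between chunks
        chunk = json.loads(line)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break  # the final chunk carries done: true
    return "".join(parts)
```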

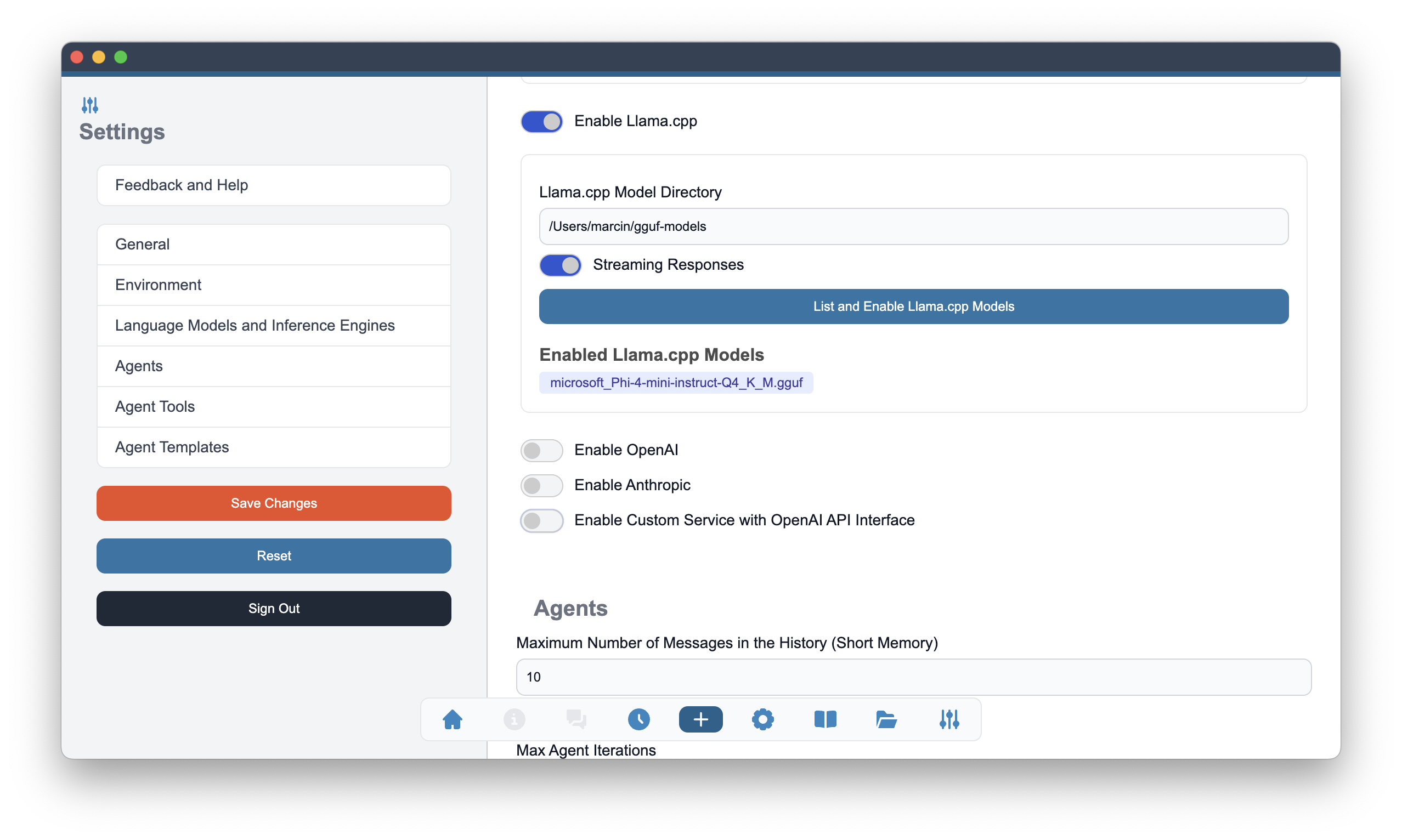

Llama.cpp

Llama.cpp is another local inference engine that allows you to run language models on your own machine. Llama.cpp is included in the Alchemist installation and does not require any additional installation steps.

To enable Llama.cpp, simply toggle the switch in the Alchemist settings. This will allow you to specify the model directory where the Llama.cpp models are stored. When you download a new GGUF model, place it in the selected location to make it available for Alchemist.
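As a sketch of what "available in the selected directory" can mean in practice, the Python snippet below collects the .gguf files placed directly in a model directory. It assumes, without confirmation from Alchemist's documentation, that only top-level files with the .gguf extension are picked up and subdirectories are ignored. The function name is hypothetical.

```python
from pathlib import Path


def find_gguf_models(model_dir):
    """Return the names of GGUF files placed directly in model_dir, sorted."""
    # glob("*.gguf") does not descend into subdirectories.
    return sorted(p.name for p in Path(model_dir).glob("*.gguf") if p.is_file())
```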

To enable Llama.cpp models in Alchemist, use the List and Enable Llama.cpp Models button in the Alchemist settings. This will display a list of all the models that are available in the selected directory. The listed models can now be selected by checking the boxes next to the models you want to enable.

The Streaming Responses option can be enabled in the Llama.cpp settings. With streaming, output from the Llama.cpp engine arrives as it is being generated, which is useful for long-running tasks or when you want to display the output progressively.

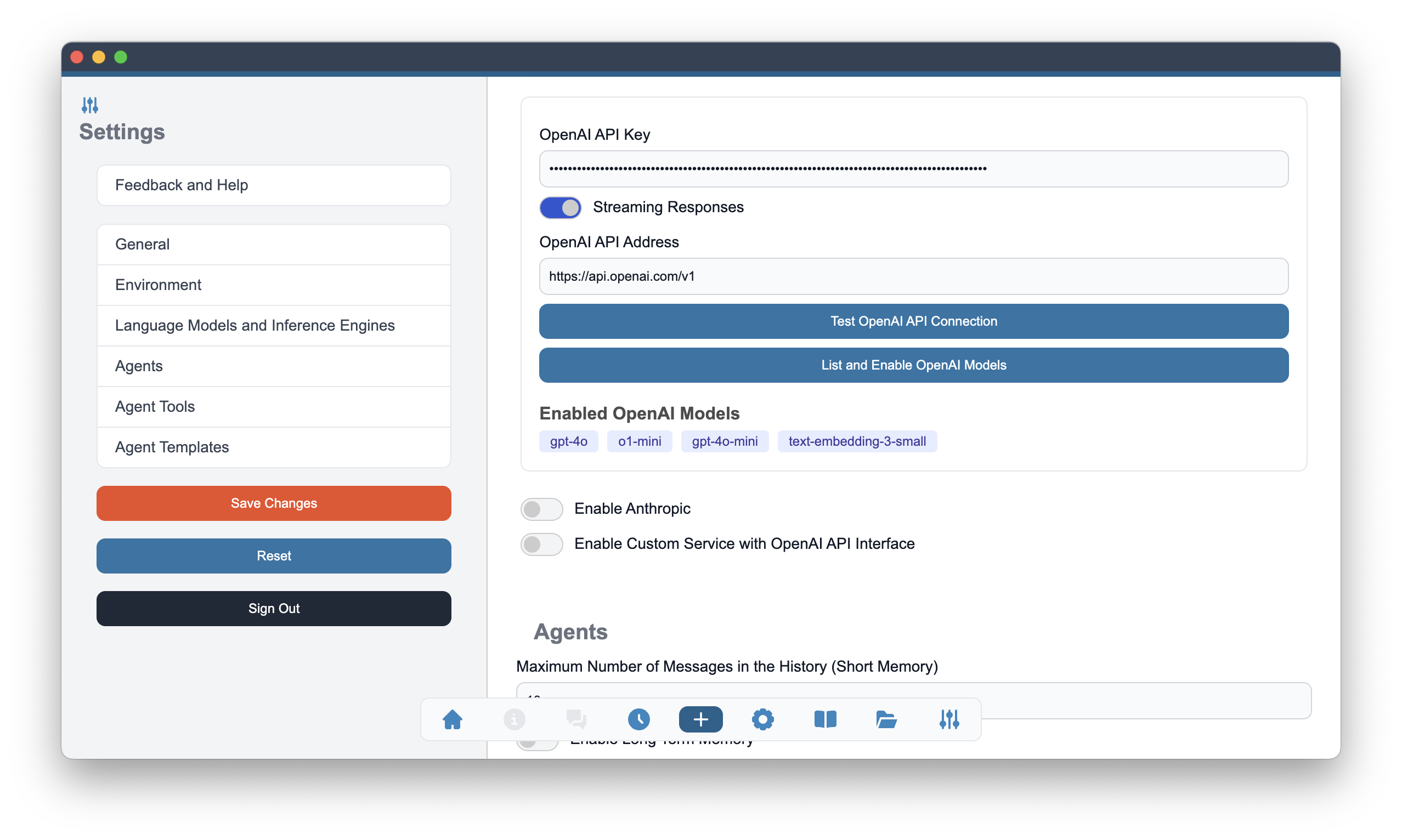

OpenAI

OpenAI is a remote API that allows you to access language models hosted by OpenAI. To use OpenAI, you need to create an account and obtain an API key. Once you have the API key, you can enable OpenAI in the Alchemist settings.

To create an OpenAI account, visit the OpenAI signup page. After creating an account, you can obtain your API key from the API keys page.

To enable OpenAI, toggle the switch in the Alchemist settings and enter your OpenAI API key. This will allow you to access the OpenAI models from Alchemist. The API key is used to authenticate your requests to the OpenAI API.

The OpenAI API Address field configures the connection to the OpenAI API. By default, it is set to https://api.openai.com/v1.

Once OpenAI is enabled, you can check the connection to the OpenAI API using the Test OpenAI API Connection button in the Alchemist settings. This will verify that the API key is valid and that you can access the OpenAI models.

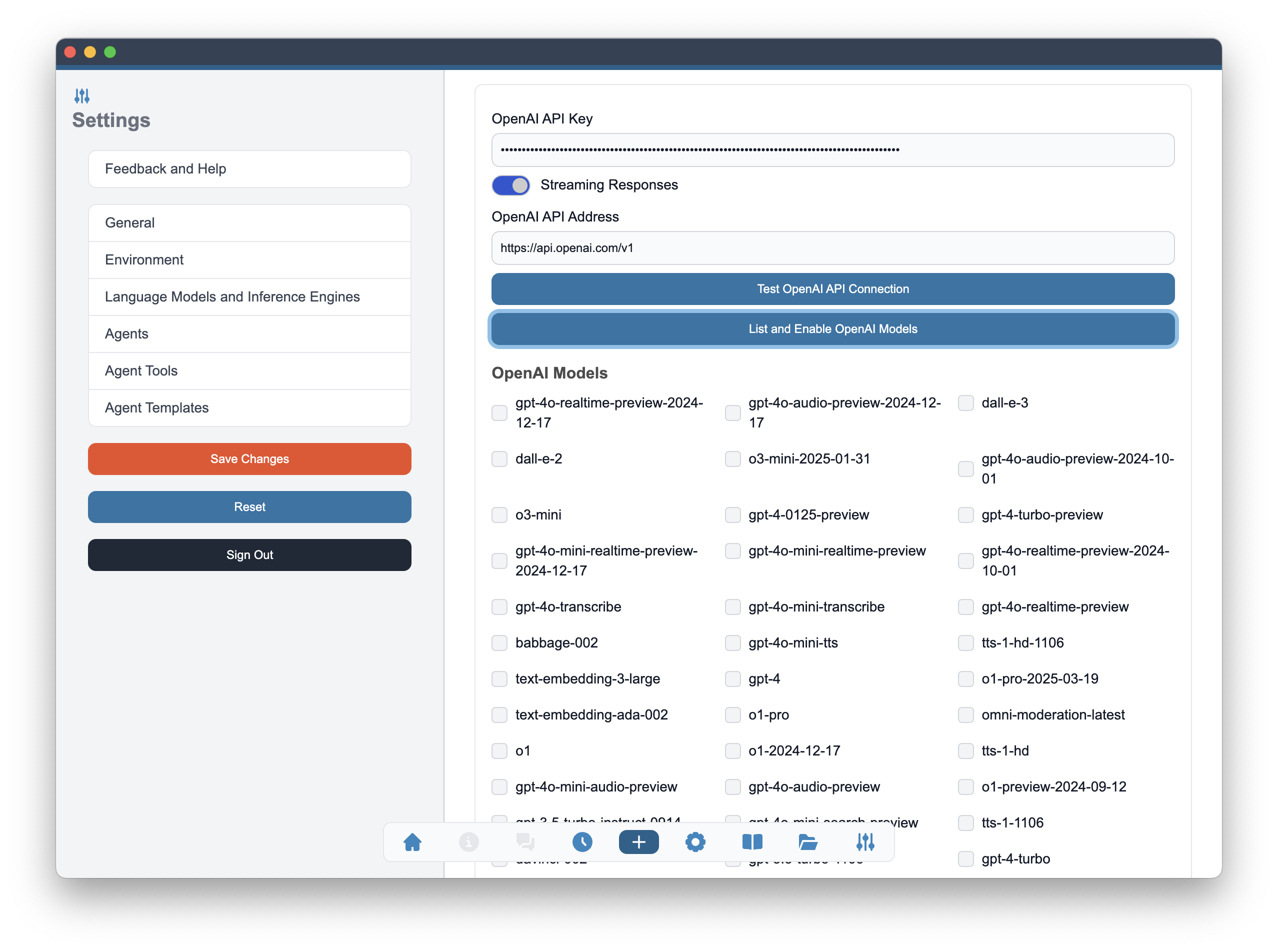

Once the connection is verified, you can list the models available through the OpenAI API. OpenAI provides a number of language models that vary in capabilities and pricing, so you can choose the ones that best fit your needs.

To list the models, click on the List and Enable OpenAI Models button in the Alchemist settings. This will display a list of all the models that are available in the OpenAI API.
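The same check can be reproduced outside Alchemist with OpenAI's public Models endpoint: a GET request to the OpenAI API Address followed by /models, with the key in an Authorization: Bearer header. A valid key returns a JSON object whose data array lists the model IDs, while an invalid key yields HTTP 401. The Python sketch below uses only the standard library, and the helper names are hypothetical.

```python
import json
import urllib.request


def openai_models_request(api_address, api_key):
    """Build an authenticated GET request for the /models endpoint."""
    return urllib.request.Request(
        f"{api_address.rstrip('/')}/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )


def list_openai_models(api_address, api_key, timeout=10.0):
    """Return sorted model IDs; urlopen raises HTTPError (e.g. 401) on a bad key."""
    request = openai_models_request(api_address, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return sorted(model["id"] for model in json.load(resp)["data"])
```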

Once the models are listed, you can select the ones you want to use in Alchemist. This will allow you to customize the language models that are available for your agents.

To select the models, check the boxes next to the models you want to enable. You can enable multiple models.

The Streaming Responses option can be enabled in the OpenAI settings. It lets you receive responses from the OpenAI API as they are being generated.
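With streaming enabled, OpenAI's Chat Completions API sends server-sent events: each line starting with data: carries a JSON chunk whose choices[0].delta.content holds the next piece of text, and the stream ends with data: [DONE]. The Python sketch below reassembles the text from such lines. It assumes a single choice per request (n=1), and the function name is hypothetical.

```python
import json


def collect_openai_stream(lines):
    """Join text deltas from a Chat Completions server-sent event stream.

    lines: decoded text lines of the HTTP response body.
    """
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            # The first chunk may carry only the role, with empty or null content.
            parts.append(choice.get("delta", {}).get("content") or "")
    return "".join(parts)
```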

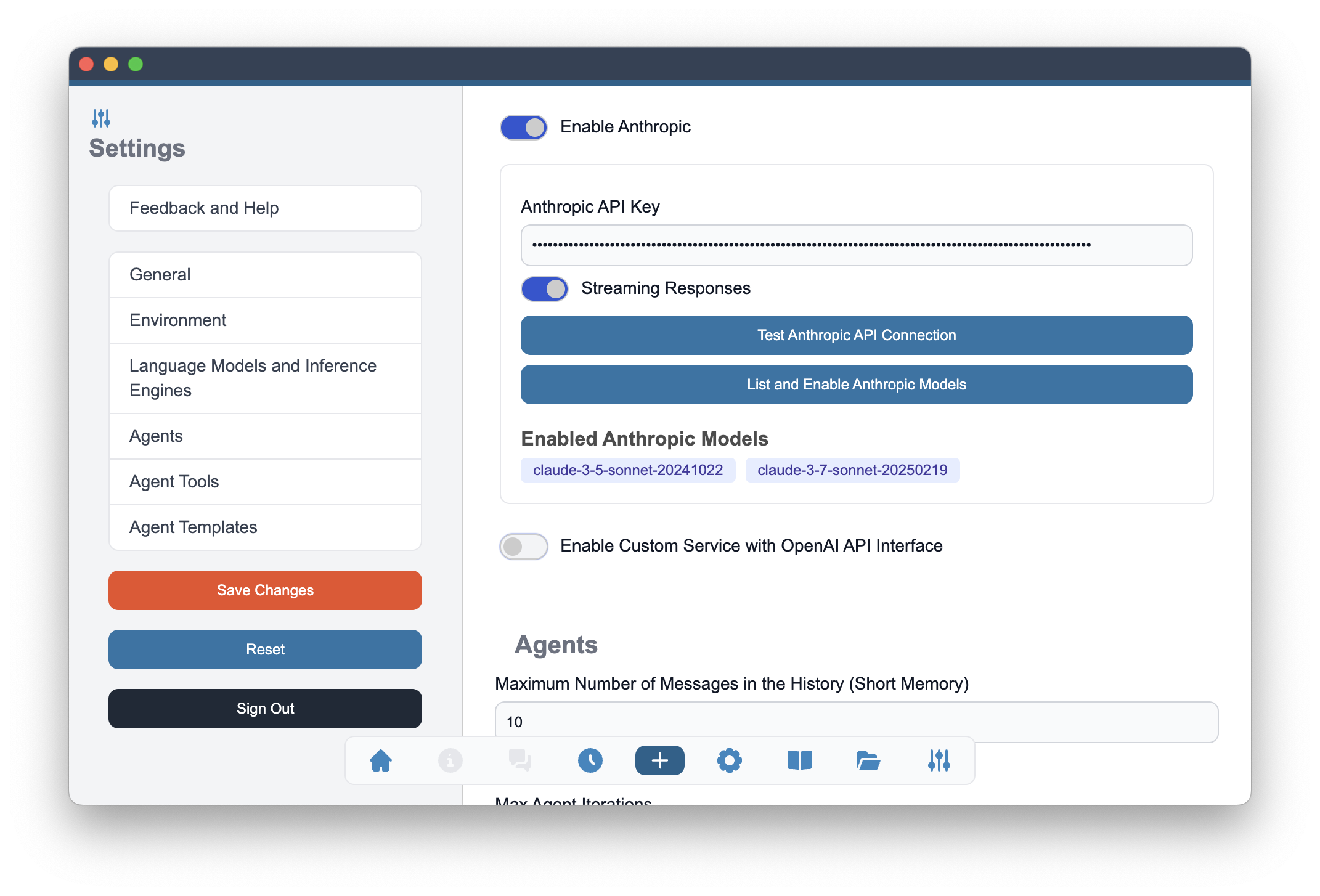

Anthropic

Anthropic is a remote API that allows you to access language models hosted by Anthropic. To use Anthropic, you need to create an account and obtain an API key. Once you have the API key, you can enable Anthropic in the Alchemist settings.

To create an Anthropic account, visit the Anthropic signup page. After creating an account, you can obtain your API key from the API keys page.

To enable Anthropic, toggle the switch in the Alchemist settings and enter your Anthropic API key. This will allow you to access the Anthropic models from Alchemist. The API key is used to authenticate your requests to the Anthropic API.

The Test Anthropic Connection API button checks the connection to the Anthropic API and verifies that the API key is valid and that you can access the Anthropic models. If the connection succeeds, a confirmation message appears and the button turns green.

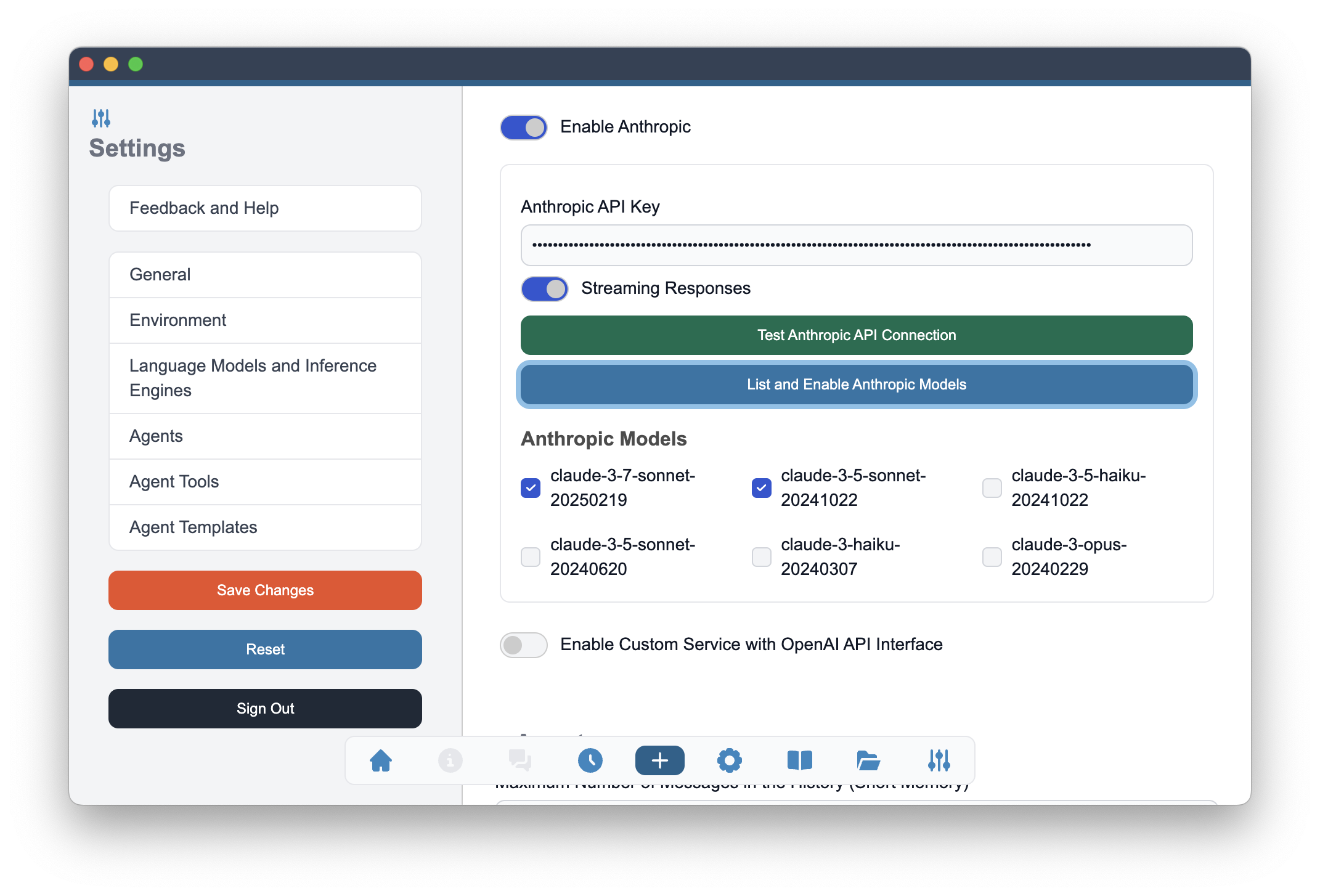

Once the connection is verified, you can list the models available through the Anthropic API. Anthropic provides a number of language models that vary in capabilities and pricing, so you can choose the ones that best fit your needs.

To list the models, click on the List and Enable Anthropic Models button in the Alchemist settings. This will display a list of all the models that are available in the Anthropic API. Check the boxes next to the models you want to enable. You can enable multiple models.
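Anthropic's public Models API can be used to reproduce the connection test and model listing by hand: a GET request to https://api.anthropic.com/v1/models with the key in an x-api-key header and an anthropic-version header. The Python sketch below builds and sends that request with the standard library. It reads only the first page of results (the response is paginated), and the helper names are hypothetical.

```python
import json
import urllib.request

ANTHROPIC_API = "https://api.anthropic.com/v1"


def anthropic_models_request(api_key):
    """Build a GET request for Anthropic's Models API."""
    return urllib.request.Request(
        f"{ANTHROPIC_API}/models",
        headers={"x-api-key": api_key, "anthropic-version": "2023-06-01"},
    )


def list_anthropic_models(api_key, timeout=10.0):
    """Return model IDs from the first page of results.

    The endpoint is paginated (see has_more in the response); an invalid
    key makes urlopen raise HTTPError.
    """
    with urllib.request.urlopen(anthropic_models_request(api_key), timeout=timeout) as resp:
        return [model["id"] for model in json.load(resp)["data"]]
```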

The Streaming Responses option can be enabled in the Anthropic settings. It lets you receive responses from the Anthropic API as they are being generated.
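Anthropic streams Messages API responses as server-sent events with typed payloads: text arrives in content_block_delta events whose delta has type text_delta, and a message_stop event closes the stream. The Python sketch below extracts the text from such a stream. It ignores other event types such as ping or tool-use deltas, and the function name is hypothetical.

```python
import json


def collect_anthropic_stream(lines):
    """Join text deltas from an Anthropic Messages API event stream.

    lines: decoded text lines of the HTTP response body.
    """
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # "event:" lines repeat the type that is also in the JSON payload
        event = json.loads(line[len("data:"):])
        if event.get("type") == "content_block_delta":
            delta = event.get("delta", {})
            if delta.get("type") == "text_delta":
                parts.append(delta.get("text", ""))
        elif event.get("type") == "message_stop":
            break  # end of the message
    return "".join(parts)
```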

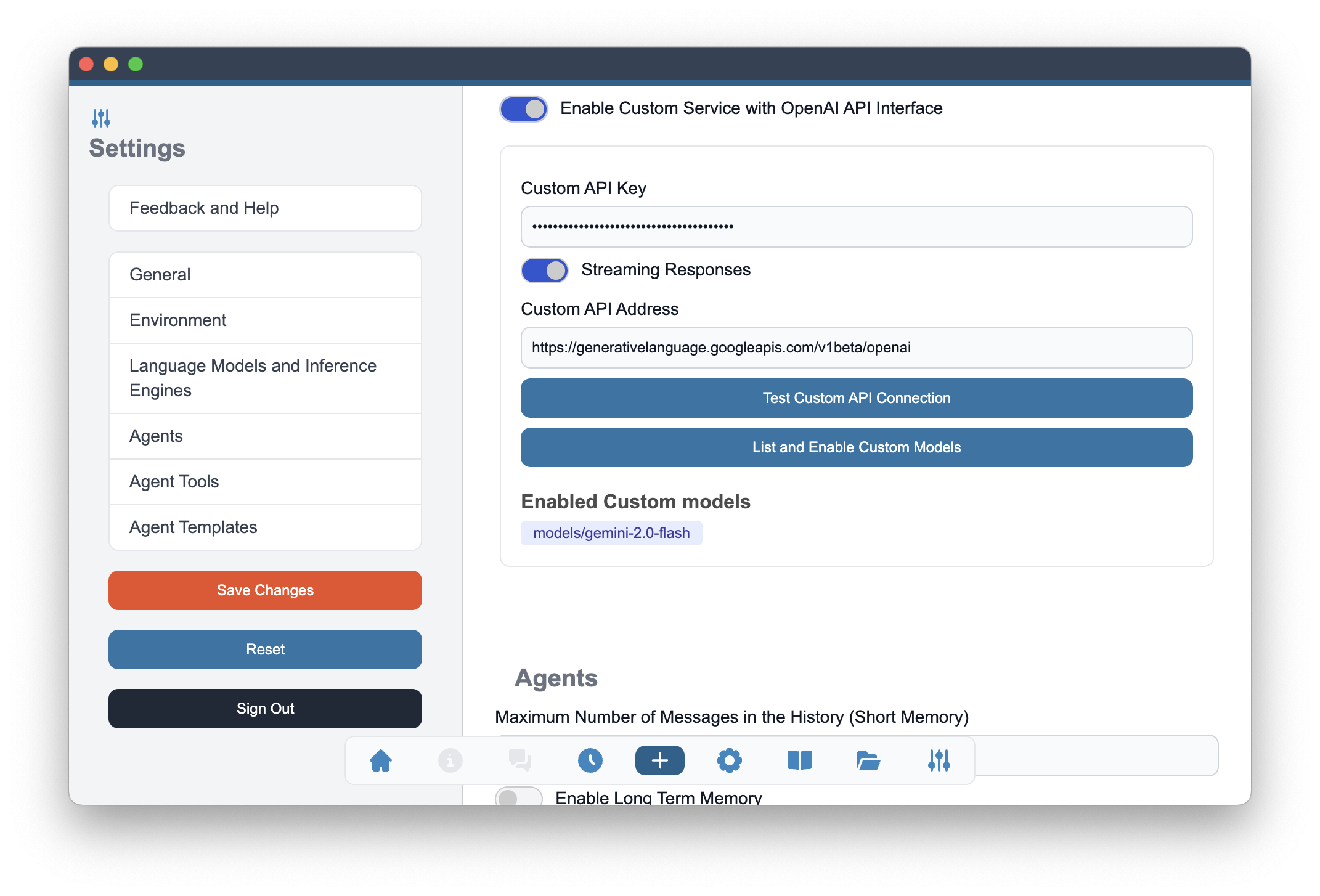

OpenAI compatible APIs

Many model providers expose an OpenAI compatible interface in addition to their own, for example Google for Gemini, xAI for Grok, and DeepSeek. The Custom Service with OpenAI API Interface lets you add these providers.

To enable OpenAI compatible APIs, toggle the switch in the Alchemist settings and enter your Custom API Key and Custom API Address. This will allow you to access the models from the selected provider.

To obtain an API key, create an account on the provider's website and generate a key on its API keys page.

The example below shows the configuration for the Google Gemini API.

Use the Test Custom API Connection and List Custom API Models buttons to verify the connection and to list and enable the models.
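Because these providers follow the OpenAI interface, the Custom API Address acts as a base URL to which paths such as /models are appended, and the Custom API Key goes into the same Authorization: Bearer header used by OpenAI. The Python sketch below lists base URLs published by Google, xAI, and DeepSeek at the time of writing; treat them as assumptions to verify against each provider's documentation. The helper name is hypothetical.

```python
import urllib.request

# OpenAI compatible base URLs as published by the providers at the time of
# writing; verify them in each provider's documentation before use.
CUSTOM_API_ADDRESSES = {
    "gemini": "https://generativelanguage.googleapis.com/v1beta/openai/",
    "grok": "https://api.x.ai/v1",
    "deepseek": "https://api.deepseek.com",
}


def custom_models_request(api_address, api_key):
    """Build a GET request for the /models endpoint of an OpenAI compatible API."""
    # Strip any trailing slash so that "/models" is joined exactly once.
    url = api_address.rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})
```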

Once the connection is verified, you can list the models that are available in the selected API. This will show you all the models that are available for use in Alchemist. The listed models can now be selected by checking the boxes next to the models you want to enable.

You can enable Streaming Responses option in the Custom API settings. This will allow you to receive streaming responses from the selected API.

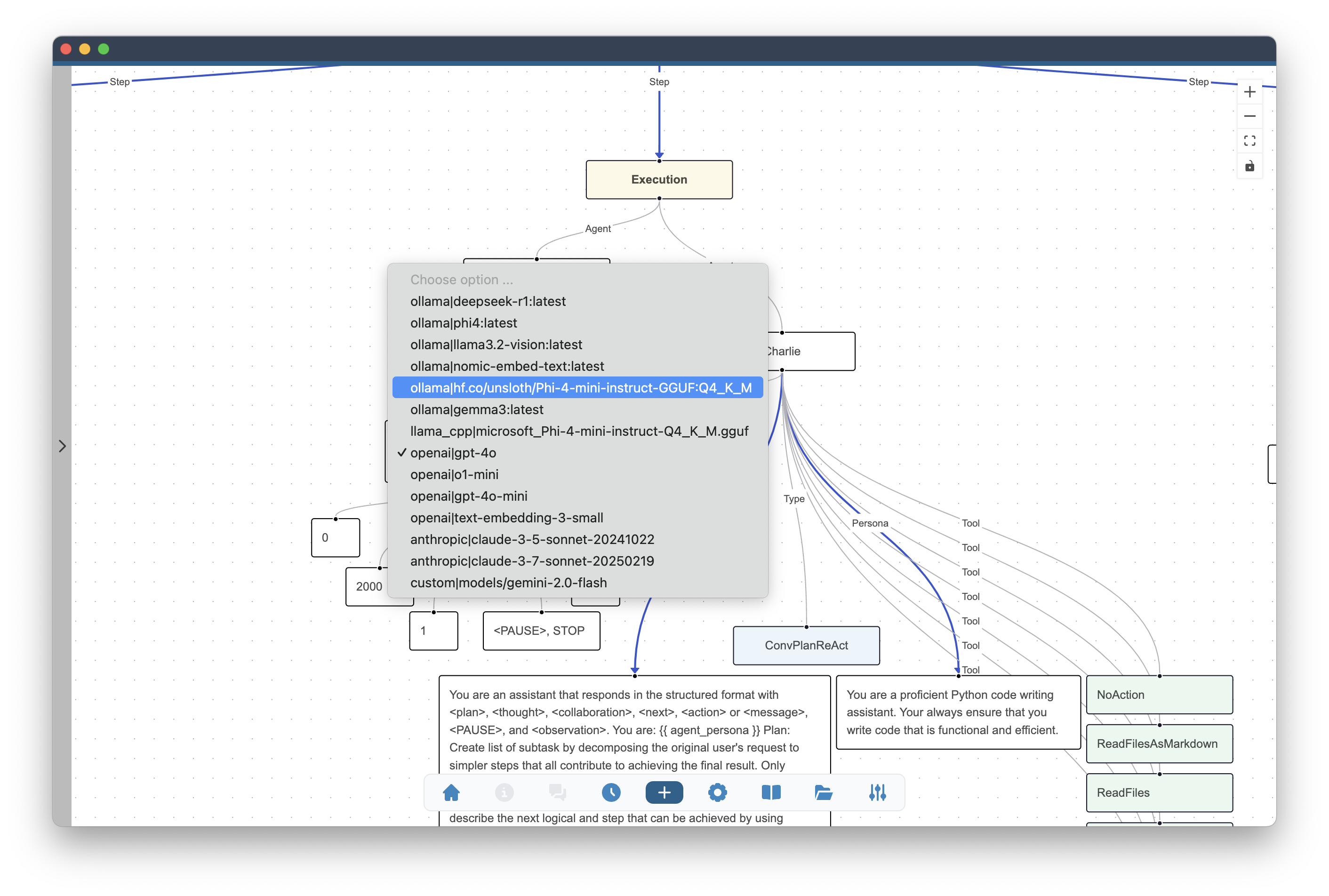

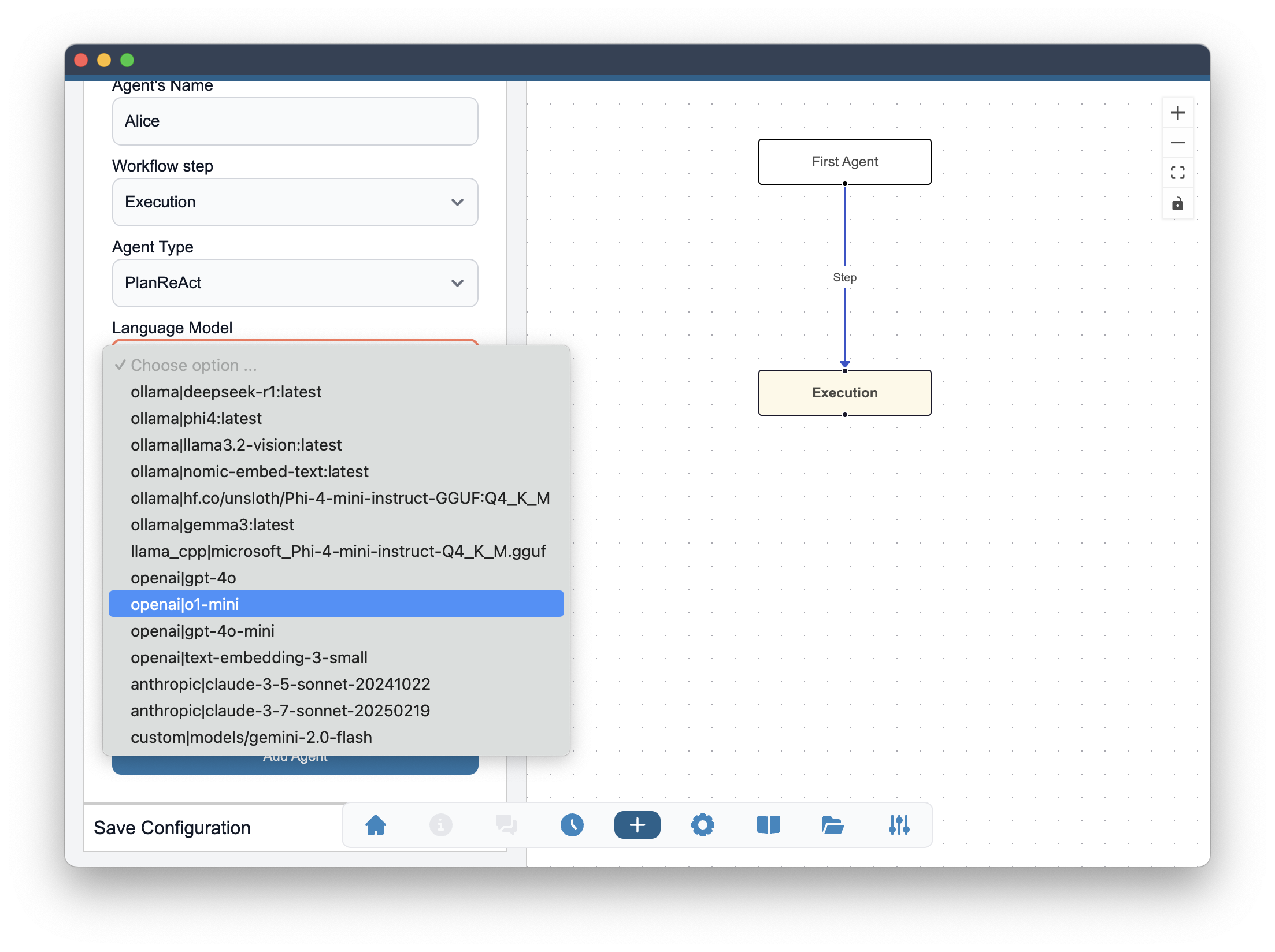

Selecting a Language Model During Agent Team Creation

When creating an agent team, you can select the language model for each agent in that team. This lets you tailor the behavior of each agent to its specific use case.

In the agent step of the agent team creation wizard, the Language Model select field lists all models enabled in the Settings. Models are shown in the format model_provider|model_name, for example ollama|llama3.3 or anthropic|claude-3-7-sonnet-20250219. This convention lets Alchemist present all available models in a single list regardless of their provider, so you can switch between them seamlessly.
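Only the display format is documented here, but it is easy to work with programmatically. The Python sketch below splits such a label into provider and model name. It splits at the first "|", which assumes provider names never contain that character, and the function name is hypothetical.

```python
def split_model_id(qualified):
    """Split a 'provider|model_name' label into (provider, model_name)."""
    # partition splits at the first "|" only, so the model name keeps any
    # characters after it (such as ":" tags).
    provider, sep, model = qualified.partition("|")
    if not sep or not provider or not model:
        raise ValueError(f"expected 'provider|model_name', got {qualified!r}")
    return provider, model
```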

In the agent creation wizard, Temperature is the only language model parameter that can be adjusted. All other parameters become visible in the agent graph after the agent is added to the team.

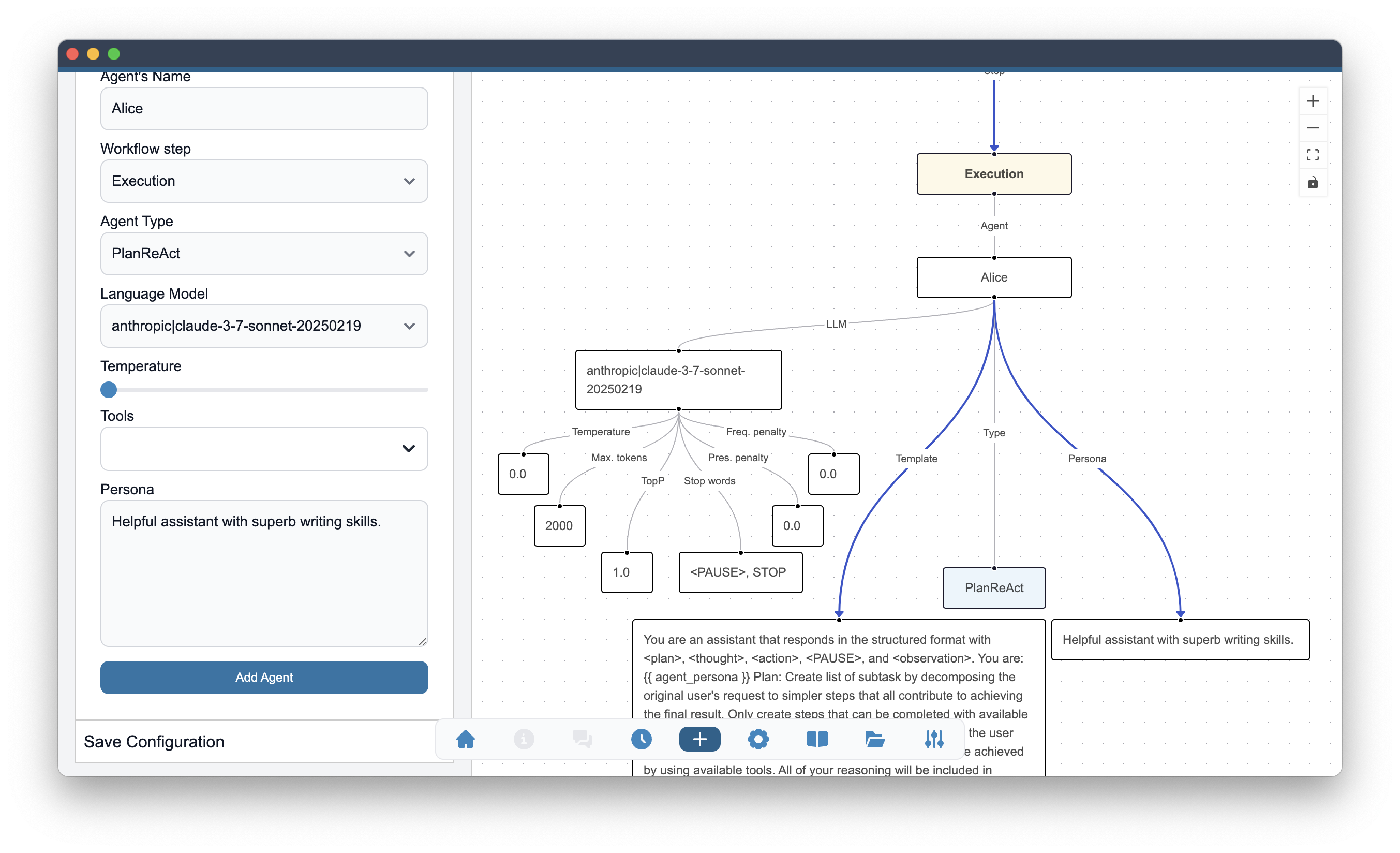

After the agent has been added to the team with the Add Agent button, it appears in the agent team graph on the right side of the window.

The agent graph shows the language model selected for each agent. The model name is displayed in a dedicated Language Model node, which is connected to the agent node (labeled with the agent name) by an edge labeled LLM. Parameters such as Temperature, Maximum Number of Tokens, and Top P are displayed as separate nodes connected to the Language Model node. All nodes are editable: double-click a node to change its value. The initial values are the defaults set in Settings.

Changing the Language Model and Its Parameters

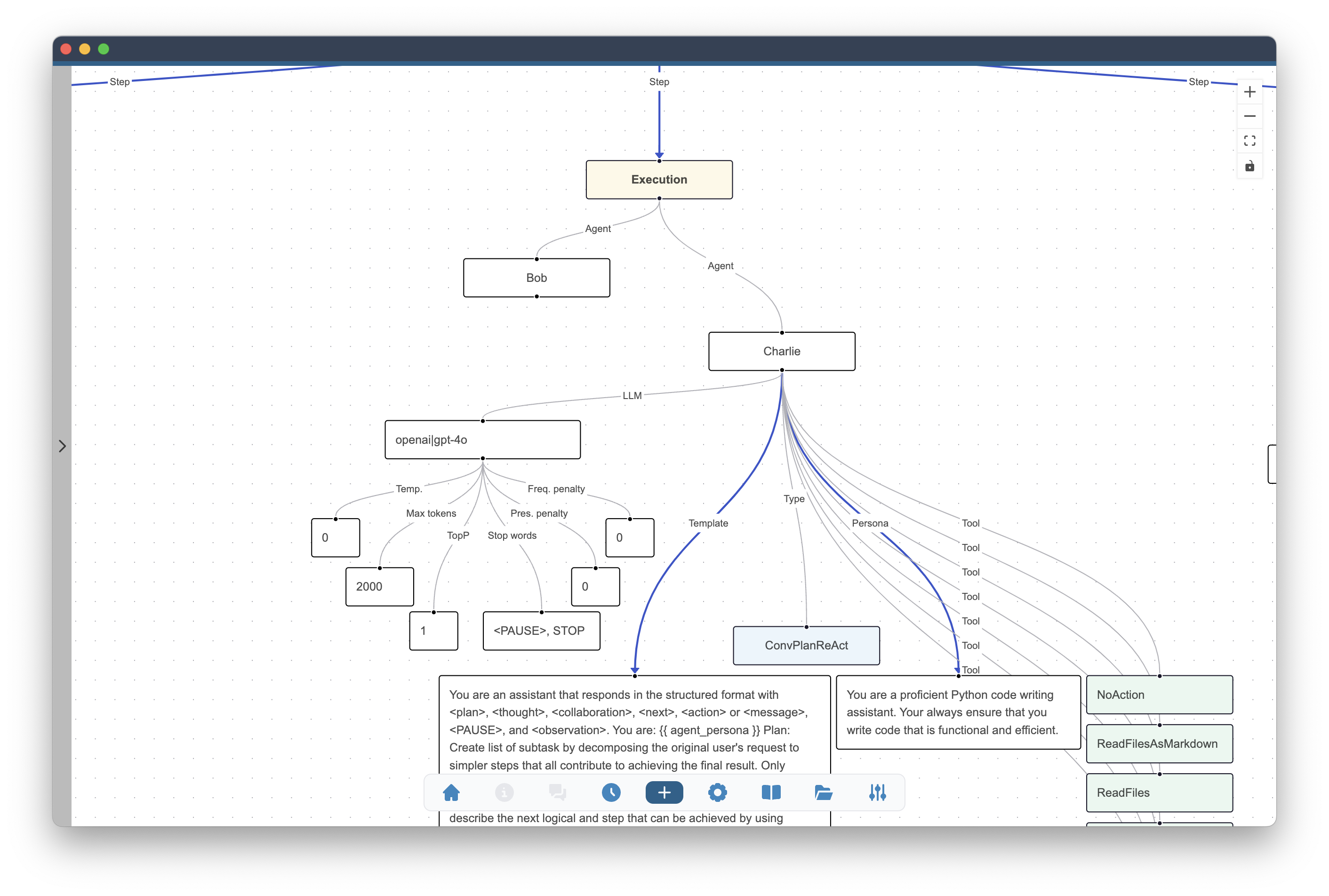

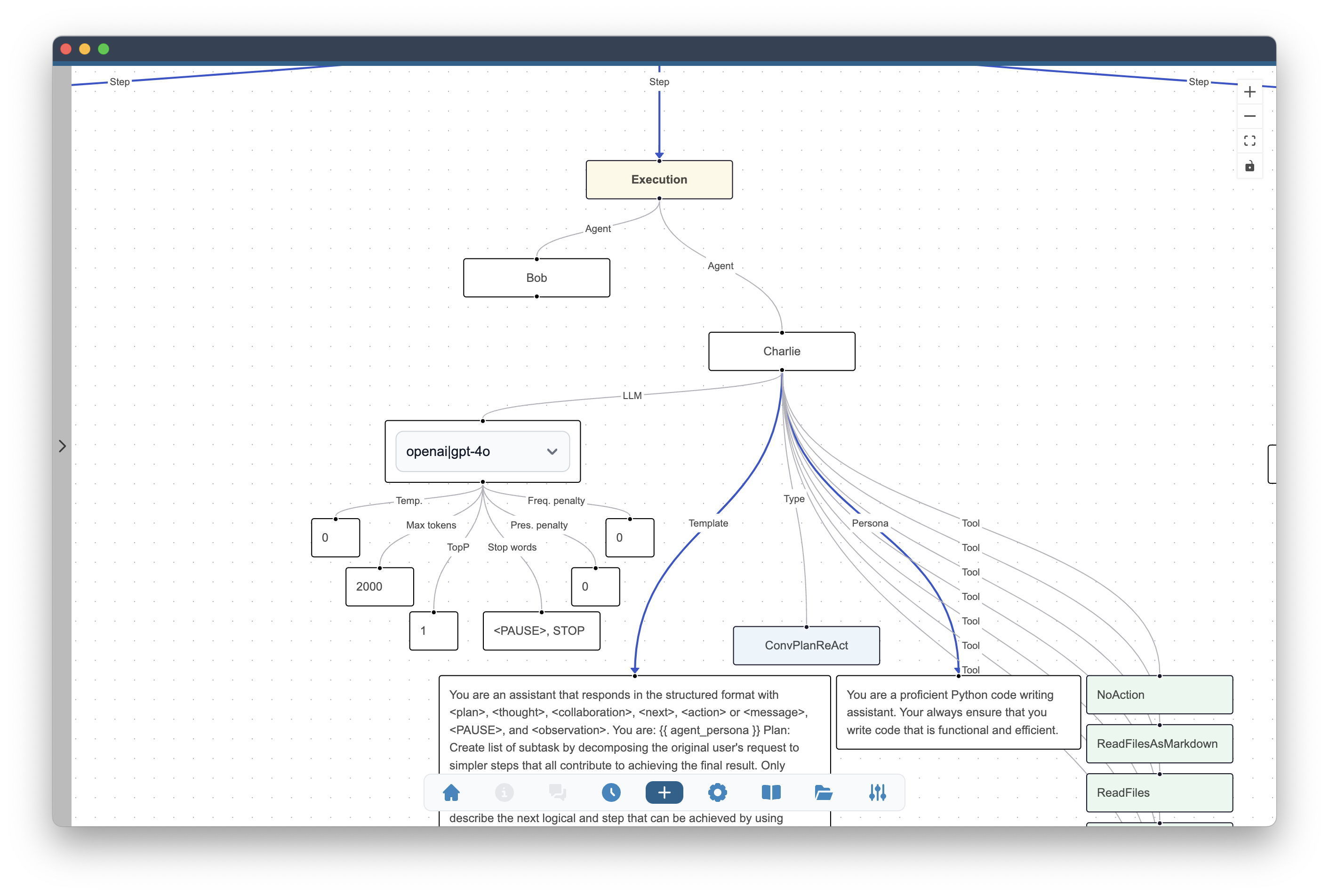

To change the language model for a given agent, double-click its language model node in the agent graph. This node shows the model provider and model name; in the example shown, it reads openai|gpt-4o.

Double-clicking opens a select field with the same list of models as in the wizard.

You can then select a different model for this agent. The remaining model parameters stay unchanged unless you edit them afterwards. Pay particular attention to Maximum Number of Tokens (Max. tokens on the graph), since its allowed upper bound may differ between models.
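One way to guard against an out-of-range Maximum Number of Tokens after switching models is to clamp the value to the new model's output limit. The Python sketch below does this with a lookup table. The model IDs and limits are placeholders rather than real values, so take actual limits from each provider's documentation; models missing from the table are left unchanged.

```python
# Placeholder output-token limits keyed by "provider|model_name".
# These IDs and numbers are illustrative only, not real model limits.
MAX_OUTPUT_TOKENS = {
    "provider|large-model": 32000,
    "provider|small-model": 4096,
}


def fit_max_tokens(requested, model_id, limits=MAX_OUTPUT_TOKENS):
    """Clamp a requested max-tokens value to the model's limit, if one is known."""
    limit = limits.get(model_id)
    if limit is None:
        return requested  # no known limit: keep the user's value
    return min(requested, limit)
```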