Enable embedding models

Embedding models convert text into numerical vector representations, so that texts with similar meaning map to nearby vectors. This makes them useful for tasks such as semantic search, clustering, and recommendation systems. In Alchemist, language models and embedding models are handled the same way by the model provider, so embedding models are enabled in the same way as language models.
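The idea behind semantic search can be sketched in a few lines of Python. This is a minimal, provider-independent illustration, not Alchemist's implementation. It assumes each text has already been turned into a vector by an embedding model, uses made-up three-dimensional vectors in place of real embeddings, and ranks documents by cosine similarity to the query, which is a common (but not the only) similarity measure for embeddings. The function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, documents, top_k=2):
    """Rank (text, vector) pairs by similarity to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
    return scored[:top_k]


# Hypothetical 3-dimensional vectors; real embedding models return
# vectors with hundreds or thousands of dimensions.
docs = [
    ("How to reset a password", [0.9, 0.1, 0.0]),
    ("Quarterly sales report", [0.0, 0.2, 0.9]),
    ("Account login problems", [0.8, 0.3, 0.1]),
]
query = [0.85, 0.2, 0.05]  # pretend embedding of "I can't sign in"

for score, text in semantic_search(query, docs):
    print(f"{score:.3f}  {text}")
```

The query shares no keywords with "Account login problems", yet both sign-in documents rank above the sales report because their vectors point in a similar direction. That is the property an embedding model provides.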

Several embedding model providers are available in Alchemist, including:

- Ollama - List of available embedding models

- OpenAI - Embedding models documentation

To enable an embedding model, follow the same steps as you would for a language model. For more information on enabling language models, refer to the Enable Language Models section.

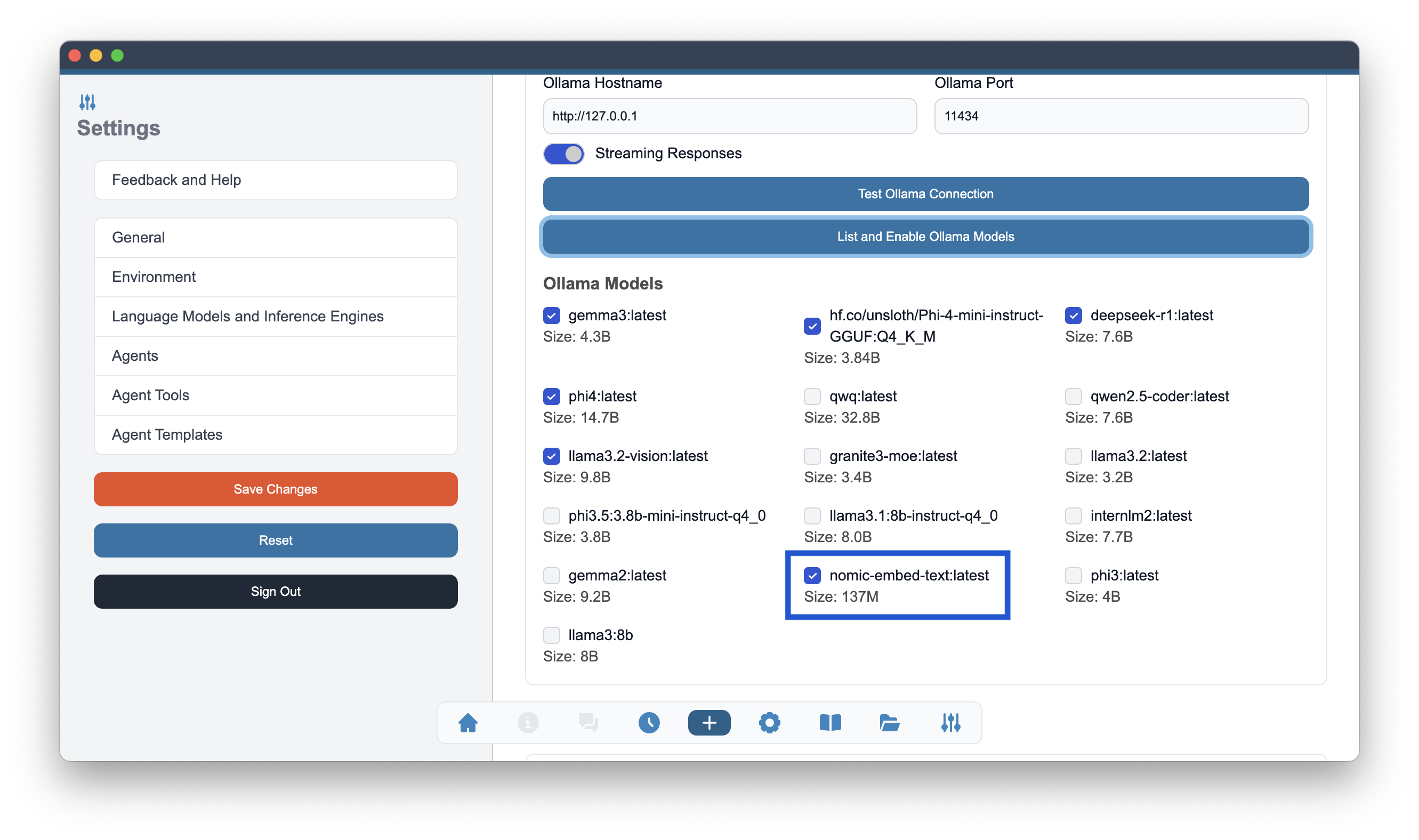

Ollama

To enable Ollama embedding models, select the Enable Ollama option as the model provider in the Language Models and Inference Frameworks section of the Alchemist Settings. Next, pull an embedding model chosen from the list of available embedding models, and then use the List and Enable Ollama Models button to enable it in Alchemist.
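Outside Alchemist, one way to check that a pulled model actually produces embeddings is to call the local Ollama server directly. The sketch below is an illustration under stated assumptions, not part of Alchemist. It assumes the model was pulled with `ollama pull nomic-embed-text`, that the server listens on Ollama's default address `http://localhost:11434`, and that the installed Ollama version exposes the `/api/embed` endpoint (older releases use `/api/embeddings` with a `prompt` field instead). The helper names `build_embed_request`, `parse_embed_response`, and `embed` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_embed_request(model: str, text: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/embed endpoint (assumed available)."""
    body = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_embed_response(raw: bytes) -> list[float]:
    """Extract the first vector from an /api/embed JSON response."""
    payload = json.loads(raw)
    # /api/embed returns a list of vectors under "embeddings", one per input.
    return payload["embeddings"][0]


def embed(model: str, text: str) -> list[float]:
    """Send the request to a running Ollama server and return one vector."""
    with urllib.request.urlopen(build_embed_request(model, text)) as resp:
        return parse_embed_response(resp.read())
```

With the server running, `embed("nomic-embed-text", "some text")` should return a single list of floats. Keeping request building and response parsing in separate functions lets them be checked without a live server.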

The nomic-embed-text model, highlighted with a blue rectangle, is an example of an embedding model provided by Ollama.

OpenAI

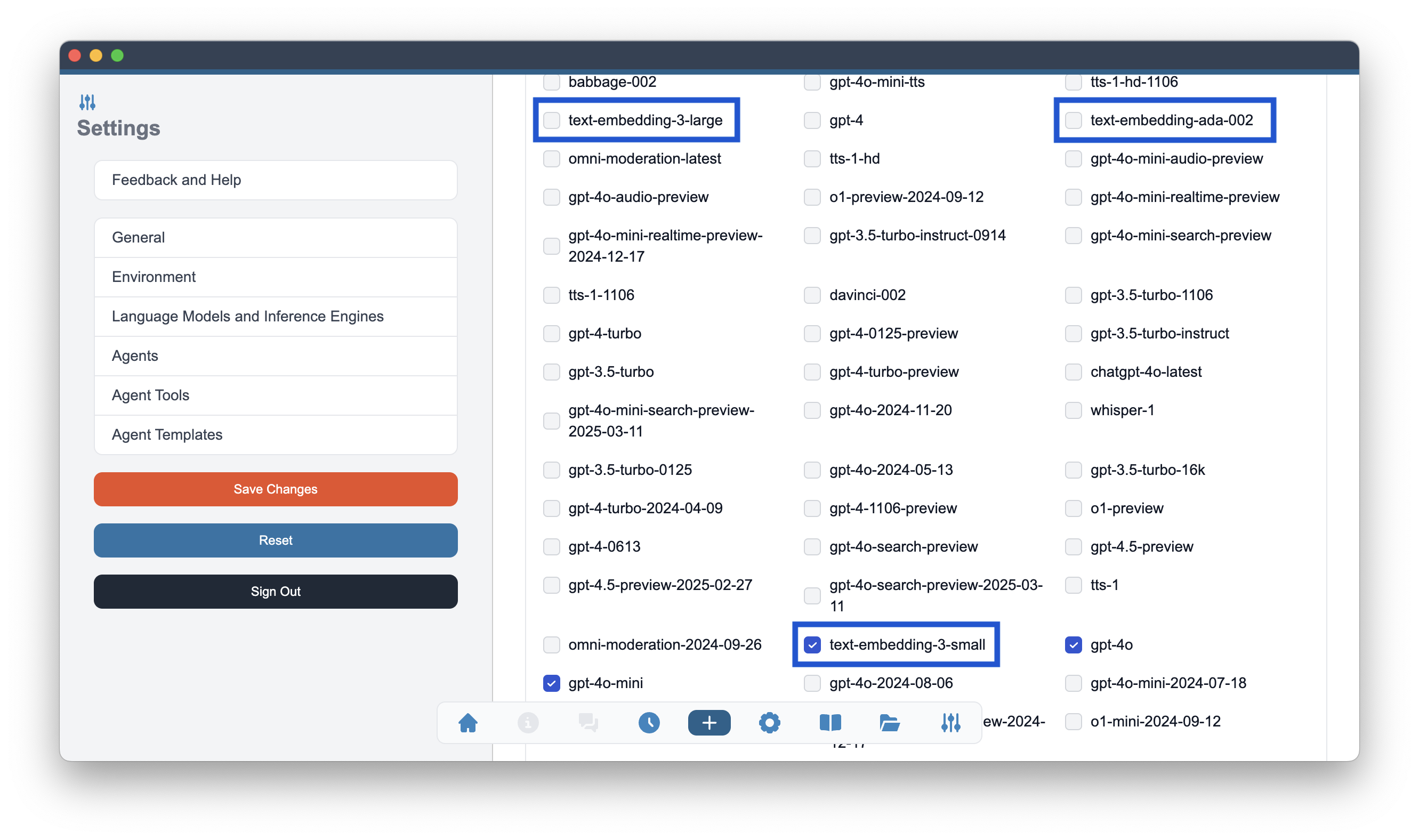

To enable OpenAI embedding models, select the Enable OpenAI option as the model provider in the Language Models and Inference Frameworks section of the Alchemist Settings. Then use the List and Enable OpenAI Models button to select the models you want to enable.
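For reference, a request to an OpenAI embedding model can be sketched with Python's standard library. This is an illustration under stated assumptions, not how Alchemist calls OpenAI internally. It assumes the public `https://api.openai.com/v1/embeddings` endpoint, an API key stored in the `OPENAI_API_KEY` environment variable, and `text-embedding-3-small` as the model. The helper names are hypothetical. Several texts can be sent in one request, and each returned vector carries an `index` that maps it back to its input.

```python
import json
import os
import urllib.request

# Assumption: the public OpenAI embeddings endpoint.
OPENAI_EMBEDDINGS_URL = "https://api.openai.com/v1/embeddings"


def build_embeddings_request(model: str, texts: list[str], api_key: str) -> urllib.request.Request:
    """Build a POST request that embeds a batch of texts with one model."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        OPENAI_EMBEDDINGS_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_embeddings_response(raw: bytes) -> list[list[float]]:
    """Return one vector per input text, ordered by each item's 'index' field."""
    items = json.loads(raw)["data"]
    return [item["embedding"] for item in sorted(items, key=lambda d: d["index"])]


def embed_texts(texts: list[str], model: str = "text-embedding-3-small") -> list[list[float]]:
    """Call the embeddings endpoint; reads the key from OPENAI_API_KEY (assumed)."""
    api_key = os.environ["OPENAI_API_KEY"]
    request = build_embeddings_request(model, texts, api_key)
    with urllib.request.urlopen(request) as resp:
        return parse_embeddings_response(resp.read())
```

With a valid key, `embed_texts(["first note", "second note"])` should return two vectors in the same order as the inputs. Sorting by `index` avoids relying on the order of items in the response.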

All OpenAI embedding models follow the same naming convention and start with the text-embedding- prefix: text-embedding-ada-002, text-embedding-3-small, and text-embedding-3-large. Enable one of them to use OpenAI embeddings.

Once an embedding model is enabled, you can use it for several tasks in Alchemist, such as semantic search in the internal Knowledge Base or an agent's long-term memory.